We previously discussed how we visualize LinkedIn site performance so we can rapidly detect and respond to failures as they start happening. Today, Priyanka, Venkat, Veena and Hien explain how we test Frontier, LinkedIn's in-house web framework, to catch problems before they reach the live site.

Frontier Framework: An Introduction

Frontier is a UI framework developed at LinkedIn to build scalable, efficient, and reliable web systems. Compared with traditional MVC frameworks, Frontier offers a rich user experience, fault tolerance, support for sophisticated use cases, dynamic adaptation of display or business logic, and latency sensitivity. In addition, Frontier's entities are reusable and testable, and the framework is heavily cached and service oriented. These strengths have made Frontier the standard for LinkedIn's primary user-facing systems.

The Frontier framework is built from various entities that work together to serve a user request. Some of the important entities are components (placeholders for displaying page content), dependencies (which fetch data from the middle tier), content services (the middle tier), URL aliases and routers (application logic that makes routing decisions), and mutators (for form display and processing).
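
To make the relationships among these entities concrete, here is a minimal Java sketch of a request flowing from a router to components, each of which resolves a dependency against a content service. All names (`ContentService`, `Dependency`, `Component`, `Router`) are hypothetical stand-ins chosen for illustration; they do not reflect Frontier's actual API, and mutators are omitted.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical stand-in for a middle-tier content service.
interface ContentService {
    Map<String, String> fetch(String key);
}

// A dependency names the middle-tier data a component needs.
record Dependency(String key) {
    Map<String, String> resolve(ContentService service) {
        return service.fetch(key);
    }
}

// A component is a placeholder that renders its fetched data into markup.
record Component(String name, Dependency dependency,
                 Function<Map<String, String>, String> template) {
    String render(ContentService service) {
        return template.apply(dependency.resolve(service));
    }
}

// A router maps a URL alias to the components that make up the page.
final class Router {
    private final Map<String, List<Component>> routes = new LinkedHashMap<>();

    void alias(String path, List<Component> components) {
        routes.put(path, components);
    }

    String dispatch(String path, ContentService service) {
        List<Component> components = routes.get(path);
        if (components == null) {
            return "404"; // no alias registered for this path
        }
        StringBuilder page = new StringBuilder();
        for (Component c : components) {
            page.append(c.render(service)); // each component pulls its own data
        }
        return page.toString();
    }
}
```

Keeping data fetching in dependencies, rather than in the components themselves, is what lets each entity be tested in isolation with a fake content service.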

The Frontier framework uses early flush: it flushes the static content (CSS/JS) to the browser as early as possible, in contrast with the traditional model, where content is rendered only after all the data (both static and dynamic) has been assembled from the backend service calls. Early flush decreases page load times because the browser can start downloading stylesheets and scripts while the server is still waiting on the backend. The Frontier framework also provides timing flags that tell the middle tier whether it should collect timing data for SLA measurement.
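
A minimal sketch of the early-flush ordering in plain Java, with no servlet container: the page writes its static head and flushes it before making the (simulated) backend call, then writes the dynamic body. `RecordingWriter`, `EarlyFlushPage`, and the asset paths are hypothetical; a real page would write to the HTTP response stream rather than an in-memory writer.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;

// Test double that records everything written so far at each flush.
final class RecordingWriter extends Writer {
    private final StringBuilder buffer = new StringBuilder();
    final List<String> flushes = new ArrayList<>();

    @Override public void write(char[] cbuf, int off, int len) { buffer.append(cbuf, off, len); }
    @Override public void flush() { flushes.add(buffer.toString()); }
    @Override public void close() { flush(); }
}

final class EarlyFlushPage {
    static final String HEAD = "<html><head><link rel=\"stylesheet\" href=\"/site.css\">"
            + "<script src=\"/site.js\"></script></head>";

    // Flushes the static head first, then blocks on the backend for the dynamic body.
    static void render(Writer out, Supplier<String> backendCall) {
        try {
            out.write(HEAD);
            out.flush(); // early flush: the browser can begin fetching CSS/JS now
            String data = backendCall.get(); // stands in for middle-tier service calls
            out.write("<body>" + data + "</body></html>");
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

In the traditional model, the single write and flush would happen only after `backendCall` returned, so the browser would sit idle for the whole backend latency.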

Developing Fronttest

The Frontier framework today comprises more than 100 Java classes and is under heavy active development, with enhancements incorporated daily. Given this pace, certifying a framework change has become increasingly challenging from the Quality perspective.

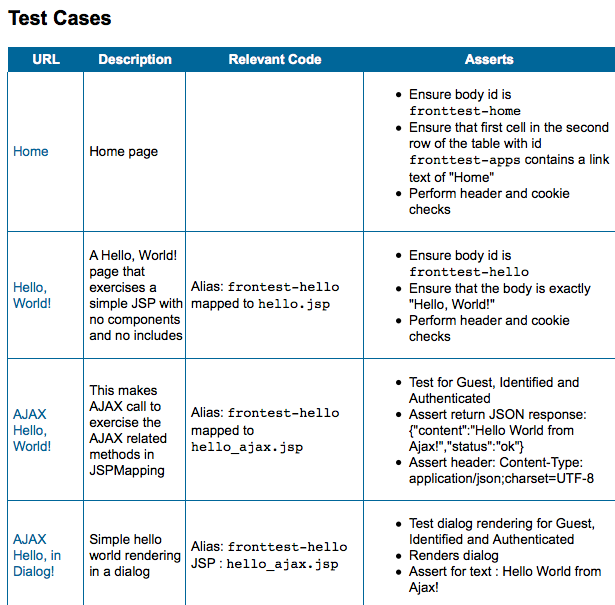

Testing a Frontier feature requires not only asserting that the desired output is rendered on the page for a user request, but also verifying the web application's response at every authentication level, under each combination of early flush and timing parameters, and checking that the right cookies and response headers are returned.
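
These combinations multiply quickly, so it helps to generate the test matrix rather than enumerate it by hand. A minimal sketch, assuming three hypothetical authentication levels (`ANONYMOUS`, `LOGGED_IN`, `SECURE`) and treating early flush and timing as on/off flags; the names are illustrative, not Fronttest's actual code.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical authentication levels used only for this illustration.
enum AuthLevel { ANONYMOUS, LOGGED_IN, SECURE }

// One test configuration: a use case under one auth level with both flags set.
record TestConfig(String useCase, AuthLevel auth, boolean earlyFlush, boolean timing) {}

final class TestMatrix {
    // Cartesian product: use cases x auth levels x early flush x timing.
    static List<TestConfig> expand(List<String> useCases) {
        List<TestConfig> configs = new ArrayList<>();
        for (String useCase : useCases) {
            for (AuthLevel auth : AuthLevel.values()) {
                for (boolean earlyFlush : new boolean[] {false, true}) {
                    for (boolean timing : new boolean[] {false, true}) {
                        configs.add(new TestConfig(useCase, auth, earlyFlush, timing));
                    }
                }
            }
        }
        return configs;
    }
}
```

With this sketch, each additional on/off flag doubles the matrix: 117 use cases give 117 × 3 × 2 × 2 = 1,404 configurations, or 117 × 3 × 2 = 702 without the early-flush dimension.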

This scope called for a comprehensive, custom Frontier test suite that exercises all Frontier features in the combinations needed to fully test a feature change, as well as its impact on the rest of the web applications (regressions).

Certifying Crucial Frontier Changes

We first had to determine which types of testing would meet the framework's needs.

First, we evaluated whether unit and integration tests would be sufficient. Because much of the functionality depends on the user interface and the browser, unit and integration tests fall short of complete certification.

We then considered running a complete regression of each web application with the new change. However, some flows, code paths, or unique conditions might not be exercised by any web application built with the framework, leaving gaps in testing. Additionally, verifying the response headers, cookies, and all authentication states for every web application is overkill. It also runs counter to the principle of traditional blackbox testing, where the focus is on the end user's point of view.

Solving the Certification Issue

To address this issue, we created Fronttest, a test web application built solely to exercise all features of Frontier. We believe that testing with this application from both whitebox and blackbox perspectives provides a well-rounded testing baseline.

A complete test plan is too long to detail here, but the following list covers some of the important features that Fronttest exercises:

- Request dispatching: Covers the control logic that decides which route an application takes based on user input and application logic. Routing also covers web flows (a sequential, single thread of steps) and the multiple-format (JSON/HTML) routes an application takes in different scenarios.

- UI Components: Includes all the UI components such as dialogs, menus, scroll bars, select labels, input text fields, and labels that constitute any web page.

- Content rendering: Page content is represented in the Frontier framework by reusable modules that are populated by the middle tier and referenced by the presentation tier. They can be simple or nested, secure or non-secure, shared between many pages, and critical or low priority. Content can be independent of, or dependent on, other content (which requires additional computation).

- Form processing with parameters: Covers constructing and rendering forms on the page with prefill options, mutating the data (per application logic), and saving it after the user submits. All form submits are handled by AJAX for faster processing and shorter wait times. This also includes error handling and displaying success or error messages on the page (per the business logic).

- Shared modules between legacy frameworks and Frontier: Covers user interface code that is shared between Frontier applications and applications built on its predecessors.

- Instrumentation: Covers the timing metrics that Frontier generates for SLA measurement and performance analysis.

- Cookies: Includes session management, personalization, and tracking for dynamic web systems.

- Others: Various other attributes of Frontier, including page generation (simple/nested), lifecycle of various page entities, secure and non-secure pages/entities, pre- and post-conditions for an entity’s processing, Frontier administration console, tracking events, and so on.
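
As one example of what the request-dispatching tests exercise, here is a minimal sketch of format-based routing: the same path is bound to an HTML handler and a JSON handler, and a hypothetical `format` request parameter selects between them. `Dispatcher`, `ResponseFormat`, and the parameter name are illustrative assumptions, not Frontier's API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

enum ResponseFormat { HTML, JSON }

final class Dispatcher {
    // Handlers keyed by path plus format, so one path can have several routes.
    private final Map<String, Function<Map<String, String>, String>> routes = new HashMap<>();

    void register(String path, ResponseFormat format, Function<Map<String, String>, String> handler) {
        routes.put(path + "|" + format, handler);
    }

    // Picks JSON when the (hypothetical) "format" parameter says so, else HTML.
    Optional<String> dispatch(String path, Map<String, String> params) {
        ResponseFormat format = "json".equalsIgnoreCase(params.get("format"))
                ? ResponseFormat.JSON : ResponseFormat.HTML;
        Function<Map<String, String>, String> handler = routes.get(path + "|" + format);
        return handler == null ? Optional.empty() : Optional.of(handler.apply(params));
    }
}
```

A dispatching test then asserts, for each path, that every format reaches the intended handler and that an unknown path produces no route.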

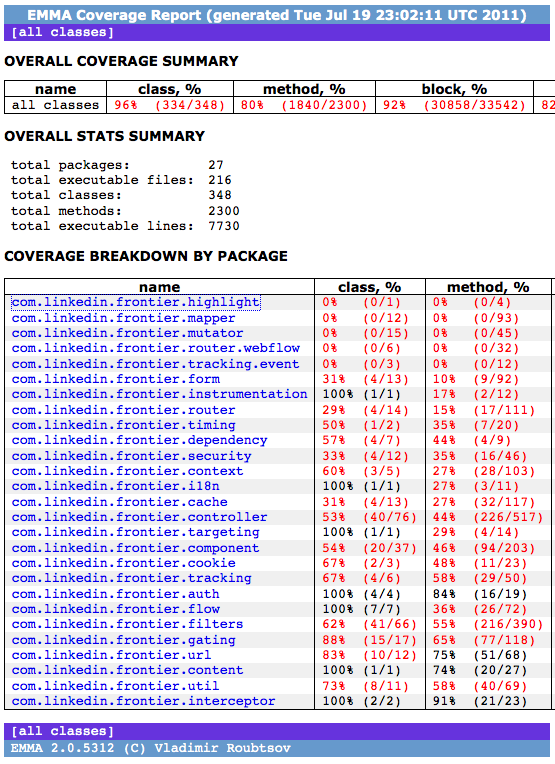

Emma

We used the Emma Java code coverage tool to ensure that all code paths were exercised. We employed traditional whitebox approaches such as line coverage, branch and loop testing, and simulation of exception and error cases. Emma generates a clear, concise summary report of all the classes, packages, and methods covered, with percentages and color coding (green and red) that visually identifies the lines covered or skipped. The report also provides click-through links to view each package, class, and method in detail.
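
A minimal sketch of the whitebox approach, written as plain Java checks rather than with any particular test framework. `TimingFlag.parse` is a hypothetical helper (not Frontier code) that interprets a timing-flag request parameter; the accepted values are assumptions. The test drives each branch, including the exception path, so that when run under a coverage tool such as Emma every line of `parse` is executed.

```java
// Hypothetical helper: decides whether timing data should be collected.
final class TimingFlag {
    static boolean parse(String value) {
        if (value == null) return false;                                // flag absent
        if (value.equals("1") || value.equals("true")) return true;     // flag on
        if (value.equals("0") || value.equals("false")) return false;   // flag off
        throw new IllegalArgumentException("bad timing flag: " + value); // error case
    }
}

// One check per branch, plus a simulated error case.
final class TimingFlagTest {
    static void run() {
        check(!TimingFlag.parse(null), "absent flag");
        check(TimingFlag.parse("true"), "true flag");
        check(!TimingFlag.parse("0"), "false flag");
        try {
            TimingFlag.parse("maybe");
            throw new AssertionError("expected IllegalArgumentException");
        } catch (IllegalArgumentException expected) {
            // exception branch exercised
        }
    }

    private static void check(boolean condition, String label) {
        if (!condition) throw new AssertionError(label);
    }
}
```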

Selenium

We used Selenium (a web testing tool) with Ruby to test the Fronttest web application from a blackbox UI perspective, that is, to ensure that all UI elements (for example, images, text, form elements, dialogs, and menus), web links, form submissions, and success and error conditions function correctly. We used various Selenium APIs to inspect and parse the HTTP responses and assert that the response headers (Pragma, Cache-Control, Expires) and cookies (browser, visit, and some custom LinkedIn cookies) were set with the correct values.
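
The suite described here was written in Ruby on top of Selenium; the sketch below is not that code. It shows only the assertion step in Java, assuming the response headers have already been captured into a map with lowercased names and the raw `Set-Cookie` values into a list. The expected header values and cookie names are illustrative assumptions, not LinkedIn's actual policy. Cookie names are extracted with the JDK's `java.net.HttpCookie.parse`.

```java
import java.net.HttpCookie;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class ResponseChecks {
    // Assumed caching policy for a page that must not be cached (illustrative only).
    static final Map<String, String> NO_CACHE = Map.of(
            "pragma", "no-cache",
            "cache-control", "no-cache, no-store",
            "expires", "0");

    // Returns a list of problems; an empty list means the response passed.
    static List<String> check(Map<String, String> headers, List<String> setCookieHeaders,
                              List<String> requiredCookies) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, String> expected : NO_CACHE.entrySet()) {
            String actual = headers.get(expected.getKey());
            if (!expected.getValue().equals(actual)) {
                problems.add(expected.getKey() + ": expected '" + expected.getValue()
                        + "' but was '" + actual + "'");
            }
        }
        List<String> seen = new ArrayList<>();
        for (String header : setCookieHeaders) {
            for (HttpCookie cookie : HttpCookie.parse(header)) {
                seen.add(cookie.getName());
            }
        }
        for (String name : requiredCookies) {
            if (!seen.contains(name)) problems.add("missing cookie: " + name);
        }
        return problems;
    }
}
```

Collecting all problems instead of failing on the first one makes a single run report every misconfigured header and cookie at once.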

Together, these whitebox and blackbox techniques give us insight into how a change or enhancement to the framework affects Fronttest. Once a change is certified against Fronttest, we can be confident about whether it functions correctly. We then follow the usual application-specific regression to certify that the change works in each web application. If necessary, we add test code and test cases to Fronttest to ensure the change is well covered.

Achievements and Future Goals

We’re working hard to ensure great test coverage for our Frontier framework.

- Based on 117 use cases, each tested in 3 authentication states and 2 timing modes (117 × 3 × 2 = 702 test cases), we achieved ~96% code coverage of the framework; the remaining 4% is supporting code that does not require testing.

- We built automated regressions to help minimize the effort and time required to certify a crucial change, increase the software quality, and reduce time-to-market.

- We certified many framework changes, including request lifecycle enhancements, i18n, expansions to support different platforms, and client-side rendering, with confidence in both quality and deployment.

- Both parts of Fronttest (Java and Selenium) are currently maintained and enhanced by the Quality team. Separating development (coding the features) from Quality (certifying those features) helps maximize quality.

- We will work to incorporate all new Frontier features in Fronttest.

- We plan to extend Fronttest to the JRuby and Grails extensions of the Frontier framework.