Prototyping Venice: Derived Data Platform

August 10, 2015

This is an interview with Clement Fung, who interned with the Voldemort team last year and liked LinkedIn so much that he decided to come back this year for another internship with another team. Since he was around, we took the opportunity to discuss the work he did with us in the fall of last year.

Felix: Hi Clement! Can you start by telling everyone a bit about yourself?

Clement: I am a fourth year Engineering student from the University of Waterloo in Canada. In the fall of 2014, I completed a software engineering internship with the Voldemort team.

Felix: What did you work on in your internship?

Clement: For the entirety of my internship, I worked on the design and prototype of a derived data serving platform called Venice.

Felix: What do you mean by derived data, and why call the project Venice?

Clement: It means data which is derived from some other signal, as opposed to being source of truth data. Aggregate data such as sums or averages computed from event streams qualify as derived data. Relevancy or recommendation data crunched by machine learning algorithms also qualifies. We came up with a bunch of project names but none were catching on, and then I mentioned Venice and it stuck. The city of Venice is full of canals, and our project is all about ingesting streams, but that’s as far as the thought process went :)

Felix: Cool! What problems does Venice intend to address?

Clement: A common use case here at Linkedin involves the computation of derived data on Hadoop, which then gets served to online applications from a Voldemort read-only store. Currently, transferring data from Hadoop to Voldemort is done by a process called Build and Push, which is a MapReduce job that creates an immutable partitioned store from a Hadoop dataset. This dataset needs to be re-built and re-pushed whenever new data becomes available, which is typically daily or more frequently. Naturally, this design results in a large amount of data staleness. Moreover, pushing more frequently is expensive as the system only supports pushing whole datasets. Venice was created to solve these problems.

Felix: What are Voldemort read-only stores currently used for?

Clement: They are used for a bunch of things! The Voldemort read-only clusters at LinkedIn serve more than 700 stores, including People You May Know and many other use cases. The Hadoop to Voldemort pipeline pushes more than 25 terabytes of data per day per datacenter.

Felix: How does Venice differ from Voldemort?

Clement: Currently, Voldemort actually exists as two independent systems. One system is designed to handle the serving of read-only datasets generated on Hadoop, while the other is designed to serve online read-write traffic. Voldemort read-write stores can have individual records mutated very fast, but do not provide an efficient mechanism for bulk loading lots of data. On the other hand, the read-only architecture was a clever trick to serve Hadoop-generated data half a decade ago, but with today’s prevalence of stream processing systems (such as Apache Samza), there is a lot to be gained from being good at serving streams of data which can quickly change one record at a time, rather than the bulky process of loading large immutable datasets. Venice aims to solve both of these use cases, large bulk loads and streaming updates, within a single system.

Felix: Sounds great! So can you describe to me what the Venice architecture looks like at a high level?

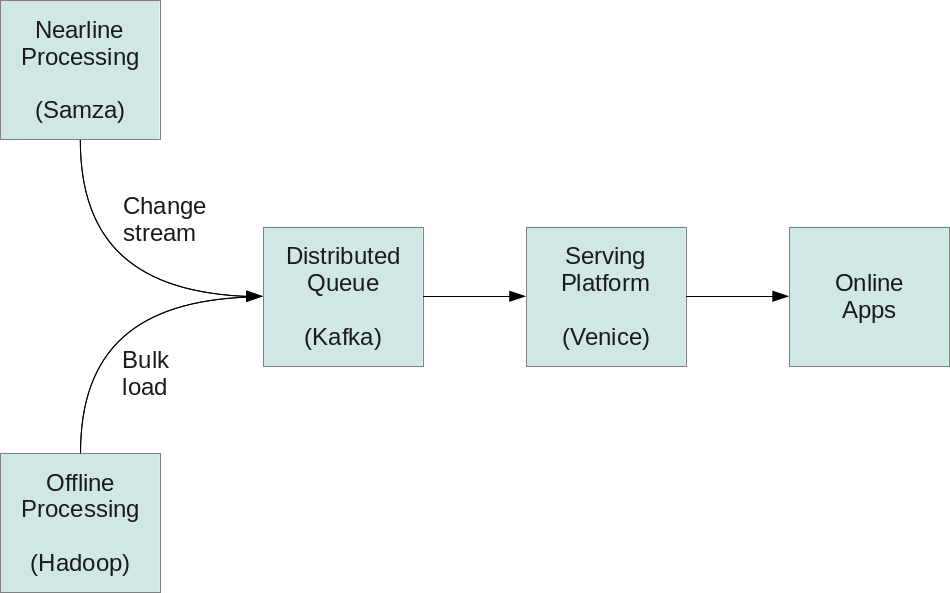

Clement: Venice makes use of Apache Kafka’s log based structure to unify inputs from both batch and stream processing jobs. All writes are asynchronously funneled through Kafka, which serves as the only entry point to Venice. The tradeoff is that since all writes are asynchronous, it does not provide read-your-writes semantics; it is strictly for serving data derived from offline and nearline systems.

Felix: This design sounds similar to the Lambda Architecture, doesn’t it?

Clement: Venice does offer first-class support for Lambda Architecture use cases, but we think its architecture is much more sane to operate at scale. For example, in typical Lambda Architectures, there would be two distinct serving systems: one for serving read-only data computed from a batch system, and another one for serving mutable real-time data computed from a stream processing system. At query time, apps would thus need to read from both the batch and real-time systems, and reconcile the two in order to deliver the most up to date record.

In our system, apps only need to query a single system, Venice, since the reconciliation of multiple sources of data happens automatically, as data is ingested. This is better for latency and for stability, since it relies on just one system being healthy, instead of being at the mercy of the weakest link in the chain.

Of course, Venice is not limited to Lambda Architecture use cases. It can also be leveraged for use cases that serve only batch data, or only stream data, the latter of which is sometimes referred to as the Kappa Architecture.

Felix: I see. What parts of the project did you work on?

Clement: I was very happy to have been given the chance to work on the design in the first couple weeks of my internship. I was involved in several design meetings in which we delved deep in the details of how Venice would behave in certain scenarios. Once that was completed, I worked on the implementation of the Venice server and client, both of which were built from the existing Voldemort structure. I even managed to work on implementing the Hadoop to Venice Bridge (which replaces Build and Push) and gathering some preliminary performance benchmarks in my last couple of weeks.

Felix: Looking forward, what will you take away from this internship?

Clement: I am really amazed with the amount that I have learned when it comes to software engineering; several topics relating to distributed systems specifically centered around Kafka, Voldemort and Hadoop. I learned to work in an agile environment, while still adapting to the changing needs of the project. I really couldn’t have asked for a better experience, it was truly a 10/10.

Felix: Well, it was great to have you on board as well! Any final thoughts?

Clement: Just want to lastly say a huge and resounding thank you to all the members of the Voldemort team who mentored and guided me throughout the process: Arunachalam Thirupathi, Bhavani Sudha Saktheeswaran, Felix GV, JF Unson, Mammad Zadeh, Lei Gao, Siddharth Singh and Xu Ha. I had a great experience and learned so much; hopefully we can collaborate on projects again in the future!

Felix: I sure hope so! Good luck with the rest of your studies and future projects, and don’t forget LinkedIn’s Data Infra team is always looking for smart people to work on cool projects :)