ParSeq: Asynchronous Java Made Easier

ParSeq is LinkedIn’s framework for writing asynchronous Java, and it powers many of LinkedIn’s largest web services. It has proven invaluable for developer productivity and essential for web service observability. ParSeq is widely adopted at LinkedIn in both frontend and midtier services, where it lets engineers write maintainable code without manually chaining asynchronous callbacks to fulfill a workflow. Although ParSeq has been quietly open source for a few years, this post introduces it to the wider engineering community and ends with a look at future features.

Motivation

Early in our history, LinkedIn’s web services made synchronous requests to other LinkedIn web services. The frontend pulled each incoming request apart, made sequential requests to multiple backends, and then combined the results into a response to the original request. Today, we make calls using asynchronous APIs, which eliminates the need to block threads during remote calls and lets us run those calls in parallel, reducing latency.

Writing and using asynchronous code, however, is tricky. It often adds accidental complexity that has nothing to do with application or business logic and is caused purely by the asynchronous mechanism. Asynchronous operations can finish in different orders because the underlying work takes varying amounts of time. Some of that variation is expected, such as fetching more data or running a more expensive operation, and some is unexpected and problematic, such as garbage collection pauses or server load. This non-determinism makes asynchronous code hard to test. Meaningless stack traces make debugging difficult, and coordinating callbacks across threads is challenging. Furthermore, developers must always consider thread safety. These factors, among others, make asynchrony quite complex.

Requirements

To address the challenges of asynchrony, in 2012 we surveyed many open and closed source solutions. None of the options we examined met all of our criteria. In the end, we drew inspiration from that research and built ParSeq to make asynchronous programming in Java easier. ParSeq, which stands for parallel-sequential, is open source, actively maintained, and meets all of the key requirements we identified during our research.

In the beginning, our requirements for ParSeq included:

- A simple API for coordinating asynchronous tasks

- Serialized computation

- Built-in tracing and visualization

- Error propagation

- The ability to perform recovery

- The ability to reuse asynchronous code

We needed serialized computation to coordinate state across multiple steps in a workflow. Serialized computation provides memory consistency guarantees for those steps and also makes it easier to reason about the invariants that hold when entering and exiting each step.

We also needed built-in tracing and visualization to determine the source of an issue, should one arise. Tracing provides important information, such as when the steps of a workflow began, in what sequence, and how long they took. In a similar vein, features such as error propagation and the ability to perform recovery were critical for resolving bugs.

Finally, we required a simple, fluent API and the ability to reuse asynchronous code in other workflows for heightened usability and accessibility.
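To make the error propagation and recovery requirements concrete, here is a minimal sketch that uses only the JDK's CompletableFuture rather than ParSeq itself. The class name, the stubbed fetchBody method, and the example URLs are assumptions made for illustration; the point is only the shape of the behavior: a failure in an early step skips the later steps and surfaces at the end of the chain, unless a recovery step supplies a fallback.

```java
import java.util.concurrent.CompletableFuture;

class RecoverySketch {
  // Hypothetical stand-in for a remote call: fails for any URL containing "down".
  static CompletableFuture<String> fetchBody(String url) {
    return CompletableFuture.supplyAsync(() -> {
      if (url.contains("down")) {
        throw new IllegalStateException("cannot reach " + url);
      }
      return "<html>ok</html>";
    });
  }

  // Error propagation: if fetchBody fails, the length step is skipped
  // and the returned future fails with the same cause.
  static CompletableFuture<Integer> bodyLength(String url) {
    return fetchBody(url).thenApply(String::length);
  }

  // Recovery: a failure anywhere upstream is replaced by a fallback value.
  static CompletableFuture<Integer> bodyLengthOrZero(String url) {
    return bodyLength(url).exceptionally(error -> 0);
  }

  public static void main(String[] args) {
    System.out.println(bodyLengthOrZero("http://up.example").join());
    System.out.println(bodyLengthOrZero("http://down.example").join());
  }
}
```

ParSeq exposes its own combinators for this on its Task type; the JDK method names above should not be read as ParSeq's API.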

Example

The following example demonstrates how to use ParSeq to fetch several pages in parallel, and combine them once they’ve all been retrieved. In this code snippet, we make an asynchronous call to retrieve a single page.

We use the map method to transform the Response into a String, and the andThen method to print out the result.
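As a rough, JDK-only analogue of that pattern (not ParSeq code), the sketch below transforms an asynchronous response into its body and then prints it. The Response class, the get method, and the canned page content are hypothetical stand-ins so that the example runs without a network; thenApply plays the role described for map, and whenComplete approximates andThen by running a side effect while passing the value through.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;

class SinglePageSketch {
  // Hypothetical stand-in for an HTTP response.
  static final class Response {
    private final String body;
    Response(String body) { this.body = body; }
    String getBody() { return body; }
  }

  // Canned content so the sketch does not touch the network.
  static final Map<String, String> PAGES =
      Map.of("http://www.google.com", "<html>google</html>");

  // Hypothetical asynchronous GET that completes on a background thread.
  static CompletableFuture<Response> get(String url) {
    return CompletableFuture.supplyAsync(
        () -> new Response(PAGES.getOrDefault(url, "")));
  }

  static CompletableFuture<String> fetchAndPrint(String url) {
    return get(url)
        .thenApply(Response::getBody)          // transform Response into a String
        .whenComplete((body, error) -> {       // side effect; the value passes through
          if (error == null) {
            System.out.println(body);
          }
        });
  }

  public static void main(String[] args) {
    // join() blocks only so that the demo does not exit early.
    fetchAndPrint("http://www.google.com").join();
  }
}
```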

To fetch a few more pages in parallel, we first create a helper method that builds a task responsible for fetching a page body given a URL. Then, we compose tasks to run in parallel using Task.par. In the following example, the homepages for Google, Yahoo, and Bing are all fetched in parallel while the original thread has returned to the calling code.
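The same idea can be sketched with the JDK alone, again as an analogue rather than ParSeq's API. Here fetchBody is a hypothetical helper backed by canned content, and CompletableFuture.allOf stands in for the role Task.par plays in the text: all three fetches are started before any result is needed, and the combining step runs once every fetch has finished.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;

class ParallelFetchSketch {
  // Canned content so the sketch does not touch the network.
  static final Map<String, String> PAGES = Map.of(
      "http://www.google.com", "<html>google</html>",
      "http://www.yahoo.com", "<html>yahoo</html>",
      "http://www.bing.com", "<html>bing</html>");

  // Hypothetical helper: asynchronously produce the body for a URL.
  static CompletableFuture<String> fetchBody(String url) {
    return CompletableFuture.supplyAsync(() -> PAGES.getOrDefault(url, ""));
  }

  static CompletableFuture<String> fetchAll() {
    // All three fetches are in flight before any of them is awaited.
    CompletableFuture<String> google = fetchBody("http://www.google.com");
    CompletableFuture<String> yahoo = fetchBody("http://www.yahoo.com");
    CompletableFuture<String> bing = fetchBody("http://www.bing.com");

    // The lambda runs only after all three have completed, so join() does not block here.
    return CompletableFuture.allOf(google, yahoo, bing)
        .thenApply(ignored -> "Google Page: " + google.join() + "\n"
            + "Yahoo Page: " + yahoo.join() + "\n"
            + "Bing Page: " + bing.join() + "\n");
  }

  public static void main(String[] args) {
    // The calling thread is free until it chooses to wait for the result.
    System.out.print(fetchAll().join());
  }
}
```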

We can do various transforms on the data we retrieved. Here's a very simple transform that sums the length of the three pages that were fetched:

The sumLengths task can be given to an engine for execution and its result value will be set to the sum of the lengths of the three fetched pages.
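A JDK-only sketch of the same transform follows, assuming the same hypothetical fetchBody stub with canned content. Each body is mapped to its length independently, and the three lengths are then combined into one sum; thenCombine is used here in place of the three-argument map described in the text.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;

class SumLengthsSketch {
  // Canned content so the sketch does not touch the network.
  static final Map<String, String> PAGES = Map.of(
      "http://www.google.com", "<html>google</html>",
      "http://www.yahoo.com", "<html>yahoo</html>",
      "http://www.bing.com", "<html>bing</html>");

  // Hypothetical helper: asynchronously produce the body for a URL.
  static CompletableFuture<String> fetchBody(String url) {
    return CompletableFuture.supplyAsync(() -> PAGES.getOrDefault(url, ""));
  }

  static CompletableFuture<Integer> sumLengths() {
    // Map each page body to its length as soon as that page arrives.
    CompletableFuture<Integer> g = fetchBody("http://www.google.com").thenApply(String::length);
    CompletableFuture<Integer> y = fetchBody("http://www.yahoo.com").thenApply(String::length);
    CompletableFuture<Integer> b = fetchBody("http://www.bing.com").thenApply(String::length);

    // Combine the three lengths once all of them are available.
    return g.thenCombine(y, Integer::sum).thenCombine(b, Integer::sum);
  }

  public static void main(String[] args) {
    System.out.println(sumLengths().join());
  }
}
```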

In our example, we added descriptions to tasks, like map("sum", (g, y, b) -> g + y + b). Using ParSeq's trace visualization tools, we can view the execution of the plan. The following waterfall graph shows task execution in time, with all GET requests executed in parallel.

Sample data used

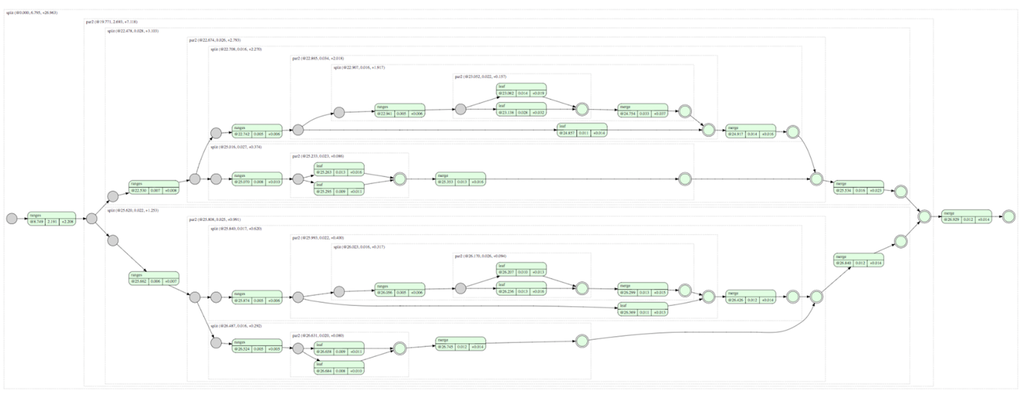

A Graphviz diagram best describes relationships between tasks: