At LinkedIn, quality is the gatekeeper for any product release. Last week, we told you how we test Frontier, LinkedIn's web framework. Today, we'll walk you through our testing lifecycle.

If we deliver on time, but the product has defects, we have not delivered on time. -- Philip Crosby

Testing Lifecycle

Here is what the testing lifecycle looks like at LinkedIn:

1. Requirement Gathering

The Product team defines functional requirements and the designers create wireframes. After the designs and the Product Requirements Document (PRD) are created, everything is reviewed with the whole team, including developers and test engineers.

2. Test Planning

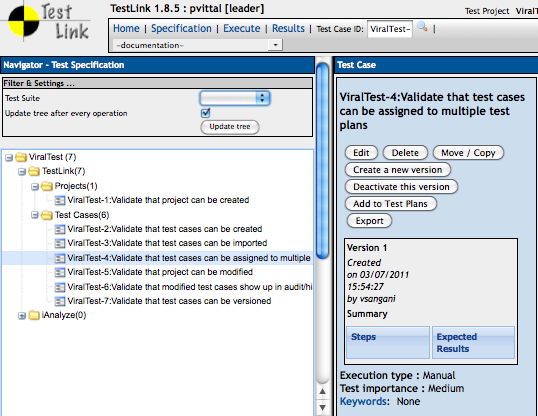

The next step in the lifecycle is to plan for all testing for the product or feature, including:

- Creating the test plan

- Writing the test cases

- Prioritizing the test cases as high, medium, or low, so that they can be run based on the scope of the project

- Holding a test plan and test case review meeting to ensure communication and a full understanding of the testing scope
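As a rough illustration of the prioritization step, here is a minimal Python sketch. The `PlannedCase` record, the `select_for_scope` helper, and the sample case names are all hypothetical and are not LinkedIn's actual tooling. The sketch only shows how tagging cases as high, medium, or low lets a run be trimmed to fit the scope of a project.

```python
from dataclasses import dataclass

# Priority levels from the test plan, ordered from most to least important.
PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass(frozen=True)
class PlannedCase:
    """Hypothetical record for one planned test case."""
    name: str
    priority: str  # "high", "medium", or "low"


def select_for_scope(cases, lowest_priority):
    """Return the cases at or above `lowest_priority`, most important first."""
    cutoff = PRIORITY_ORDER[lowest_priority]
    selected = [c for c in cases if PRIORITY_ORDER[c.priority] <= cutoff]
    return sorted(selected, key=lambda c: PRIORITY_ORDER[c.priority])


# Made-up sample plan, for illustration only.
plan = [
    PlannedCase("profile page loads", "high"),
    PlannedCase("tooltip copy is correct", "low"),
    PlannedCase("search returns results", "medium"),
]

# A small project might only have room for the high and medium cases.
for case in select_for_scope(plan, "medium"):
    print(case.priority, case.name)
```

A larger project would pass `"low"` as the cutoff and run the full plan.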

3. Functional Testing

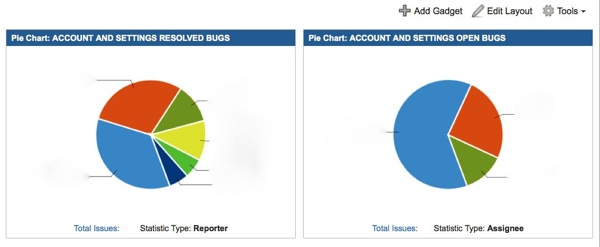

Functional and regression testing begin after the test planning is complete. This testing includes:

- New feature testing: this includes browser compatibility testing (Firefox, Safari, Chrome, IE) on VMware virtual machines.

- Bug cycle: filing bugs in JIRA, then fixing them and verifying the fixes.

- Regression testing: ensuring that nothing in the existing functionality is broken. We use our automated test suites for this testing.

- A/B Testing: we release the feature in steps. First it’s released to the internal group, then to the company so we catch all edge cases. After all the bugs are fixed, we ramp it slowly to the rest of our users.
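The stepwise release described in the last bullet can be sketched as a small gating function. This is a simplified illustration under stated assumptions: the stage names, the `sees_feature` helper, and the hash-based percentage ramp for members are hypothetical and do not describe LinkedIn's actual ramping system.

```python
import hashlib

# Ramp stages in the order described above. The stage names and the
# percentage-based member ramp are assumptions made for this sketch.
STAGES = ("internal_group", "company", "members")


def member_bucket(member_id: str) -> int:
    """Map a member ID to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(member_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def sees_feature(audience: str, member_id: str, stage: str, ramp_percent: int) -> bool:
    """Decide whether a user sees the feature at the current ramp stage.

    audience: which group the user belongs to (one of STAGES).
    stage: how far the ramp has progressed (one of STAGES).
    ramp_percent: share of members included once the stage is "members".
    """
    if STAGES.index(audience) < STAGES.index(stage):
        return True  # earlier audiences keep the feature as the ramp widens
    if audience != stage:
        return False  # the ramp has not reached this audience yet
    if audience == "members":
        return member_bucket(member_id) < ramp_percent
    return True


# While the ramp is still at the internal group, the rest of the company waits.
print(sees_feature("company", "user-1", "internal_group", 0))
# At a 100% member ramp, every member is included.
print(sees_feature("members", "user-1", "members", 100))
```

Hashing the member ID keeps each member in the same bucket between requests, so raising `ramp_percent` only ever adds people to the feature.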

4. Automation

Automation is done in parallel with functional testing. We use Selenium with Ruby for UI automation and Selenium with Java for mobile automation. Unit tests are also written for new features. At LinkedIn, we release a feature only after its tests are 100% automated, so the timeline is planned accordingly.

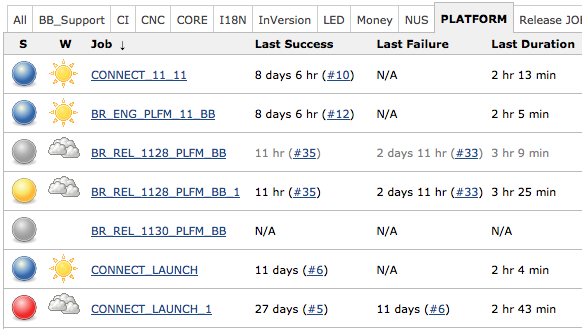

5. Regression Run and CI

We set up a Continuous Integration (CI) run on Hudson that starts the unit tests and the automated regression suite. For a branch to meet the “GO” criteria, it has to pass all of them.
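The "GO" rule is an all-or-nothing check, which a few lines of Python can express. The check names and the `meets_go_criteria` helper below are hypothetical; in practice the results would come from the CI server rather than a hand-written dictionary.

```python
# Runs that must succeed before a branch is a "GO". The names are
# illustrative assumptions, not actual Hudson job names.
REQUIRED_RUNS = ("unit_tests", "regression_suite")


def meets_go_criteria(results: dict) -> bool:
    """Return True only if every required run is present and succeeded."""
    return all(results.get(name) is True for name in REQUIRED_RUNS)


def failing_runs(results: dict) -> list:
    """List the required runs that are missing or did not succeed."""
    return [name for name in REQUIRED_RUNS if results.get(name) is not True]


branch_results = {"unit_tests": True, "regression_suite": False}
print(meets_go_criteria(branch_results))
print(failing_runs(branch_results))
```

A missing result counts as a failure here, so a run that never started cannot let a branch through by accident.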

6. Release Branch Creation

After the feature branch is qualified, it is merged with all other feature branches to form a release branch. Regression and functional tests are executed on the release branch to ensure compatibility between the merged branches.

7. Deployment and Testing on Staging Environment

The release branch is then pushed to the staging environment. Sanity tests are executed at different steps to ensure backward compatibility of code for the applications and services being pushed. All issues found are fixed forward to ensure smooth deployment. Test engineers run a final round of regression and feature testing and developers review the logs.

8. Performance Testing

Our Performance team runs tests with JMeter to ensure the feature performs well under load. These tests are run on the staging environment, at 1/8th of the production load.
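The one-eighth ratio makes it easy to translate between the two environments. The sketch below assumes the ratio applies directly to request rate, and the figure of 4,000 requests per second is a made-up number used only for the arithmetic; neither comes from LinkedIn's actual performance setup.

```python
from fractions import Fraction

# From the text: the staging load is 1/8th of the production load.
STAGING_SHARE = Fraction(1, 8)


def staging_target(production_rate: int) -> Fraction:
    """Request rate to drive at staging for a given production rate."""
    return production_rate * STAGING_SHARE


def production_equivalent(staging_rate: int) -> Fraction:
    """Production rate that a given staging rate stands in for."""
    return staging_rate / STAGING_SHARE


# Hypothetical production peak, in requests per second.
hypothetical_peak = 4000
print(staging_target(hypothetical_peak))
print(production_equivalent(500))
```

Using `Fraction` keeps the ratio exact, so converting a rate to staging and back returns the original number.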

9. Production Push

We keep a close watch as the code makes its way to production to ensure the code ships out the door with good quality. This is almost when we can celebrate.

10. Monitoring

Our job isn’t quite done yet. We constantly monitor logs and real-time graphs to ensure everything is working smoothly.

11. Hotfixes (if needed)

After the functionality is live in the production environment, our customer service team, product team, and engineers constantly monitor all customer feedback from our site feedback tool. If there are any issues, we try to fix them as quickly as possible, in most cases within 24 hours. This is known as hotfixing a bug.

Test engineering at LinkedIn

Our goal at LinkedIn is to make the product useful to our members and to ship products with the utmost quality. As a test engineer myself, I can sleep soundly knowing there will never be a compromise in quality at any step at LinkedIn.

Quality is never an accident; it is always the result of intelligent effort. -- John Ruskin