I'm thrilled to announce that we have finally cut a release branch and are ready to do the 0.90 open source release of Project Voldemort. For folks still enchanted by Potter mania: no, I am not talking about Harry's nemesis. Project Voldemort is an open-source distributed key-value store used here at LinkedIn and at various other companies. This is one of our biggest open-source releases and contains features that we have worked on and deployed at LinkedIn over the past year.

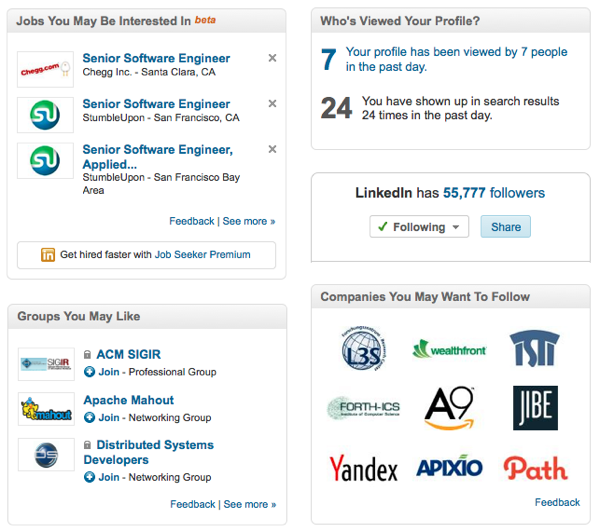

Over the past year our internal user base has grown tremendously. About three years ago Voldemort was deployed as a small cluster to serve our first product, Who viewed my profile. Over time we have grown to multiple clusters, some of which now span LinkedIn's two data centers. At its core, a single cluster serves one of two types of applications: those dealing with read-only static data (computed offline in Hadoop after some number crunching) or those dealing with read-write data. With around 200 stores overall in production, we now serve various critical user-facing features. Some examples of applications that use us include People you may know, Jobs you may be interested in, Skills, LinkedIn Share Button, Referral Engine, Company Follow, LinkedIn Today and more. With new users ramping up nearly every other week, our clusters have collectively started serving over a billion queries per day.

What's new?

One of the most important upgrades we have made in production recently is switching all our clients and servers from the legacy thread-per-socket blocking I/O approach to the new non-blocking implementation, which multiplexes connections over a fixed number of threads (usually set in proportion to the number of CPU cores on the machine). This is good from an operations perspective on the server because we no longer have to keep manually bumping up the maximum number of threads whenever new clients are added. From the client's perspective, we no longer need to worry about thread pool exhaustion caused by slow responses from slow servers.
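To make the contrast with thread-per-socket concrete, here is a minimal sketch of the multiplexing idea in Python, using the standard `selectors` module. It is not Voldemort's implementation (which is in Java); the function names `serve_echo` and `run_demo` and the echo protocol are made up for illustration. The point is only that a single thread can service several connections by waking up for whichever sockets are ready.

```python
import selectors
import socket
import threading


def serve_echo(server_ends, stop_event):
    """Echo on many connections from ONE thread by multiplexing with a selector."""
    sel = selectors.DefaultSelector()
    for conn in server_ends:
        conn.setblocking(False)
        sel.register(conn, selectors.EVENT_READ)
    try:
        while not stop_event.is_set():
            # Wake up only for sockets that have data ready to read.
            for key, _events in sel.select(timeout=0.05):
                conn = key.fileobj
                data = conn.recv(4096)
                if data:
                    conn.sendall(data)  # acceptable for tiny payloads in a sketch
                else:
                    sel.unregister(conn)  # peer closed its end
                    conn.close()
    finally:
        sel.close()


def run_demo(num_clients=3):
    """Hypothetical driver: several clients, one server thread."""
    pairs = [socket.socketpair() for _ in range(num_clients)]
    client_ends = [c for c, _s in pairs]
    server_ends = [s for _c, s in pairs]
    stop = threading.Event()
    worker = threading.Thread(target=serve_echo, args=(server_ends, stop))
    worker.start()
    replies = []
    try:
        for i, client in enumerate(client_ends):
            client.settimeout(2)
            client.sendall(f"ping-{i}".encode())
        for client in client_ends:
            replies.append(client.recv(4096).decode())
    finally:
        stop.set()
        worker.join()
        for c, s in pairs:
            c.close()
            s.close()
    return replies
```

Adding a fourth or a four-hundredth client here means registering one more socket with the selector, not raising a thread limit, which is the operational win described above.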

While upgrading the client-side logic, we also redesigned the routing layer to model it as a pipelined finite state machine (we call it the pipeline routed store). For example, a put() request on the client is now modeled as the following series of states:

- Generate list of nodes to put to

- Put sequentially till first success

- Put in parallel to the rest of the nodes

- Increment vector clock
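The four states above can be sketched as a small state machine in which each handler does its work and returns the next state. This is an illustrative Python model, not the actual Java client code: `FakeNode`, `pipelined_put`, the `State` names, and the dictionary used as a vector clock are all assumptions made for the example, and a real router would choose replica nodes by hashing the key rather than using every node.

```python
from concurrent.futures import ThreadPoolExecutor
from enum import Enum, auto


class State(Enum):
    CONFIGURE_NODES = auto()
    SERIAL_PUT = auto()
    PARALLEL_PUT = auto()
    INCREMENT_CLOCK = auto()
    DONE = auto()


class FakeNode:
    """Hypothetical in-memory stand-in for a storage node."""

    def __init__(self, node_id, up=True):
        self.node_id, self.up, self.data = node_id, up, {}

    def put(self, key, value):
        if not self.up:
            raise ConnectionError(f"node {self.node_id} is down")
        self.data[key] = value


def pipelined_put(key, value, cluster, clock):
    """Run the put() pipeline; returns the number of successful writes."""
    ctx = {"nodes": [], "master": None, "rest": [], "acks": 0}

    def configure_nodes():
        ctx["nodes"] = list(cluster)  # simplification: every node is a replica
        return State.SERIAL_PUT

    def serial_put():
        # Try nodes one at a time until the first write succeeds.
        for i, node in enumerate(ctx["nodes"]):
            try:
                node.put(key, value)
            except ConnectionError:
                continue
            ctx["master"], ctx["rest"], ctx["acks"] = node, ctx["nodes"][i + 1:], 1
            return State.PARALLEL_PUT
        raise RuntimeError("no node accepted the write")

    def parallel_put():
        def attempt(node):
            try:
                node.put(key, value)
                return True
            except ConnectionError:
                return False

        if ctx["rest"]:
            with ThreadPoolExecutor(max_workers=len(ctx["rest"])) as pool:
                ctx["acks"] += sum(pool.map(attempt, ctx["rest"]))
        return State.INCREMENT_CLOCK

    def increment_clock():
        # Assumption: bump the entry of the node that took the first write.
        master_id = ctx["master"].node_id
        clock[master_id] = clock.get(master_id, 0) + 1
        return State.DONE

    handlers = {
        State.CONFIGURE_NODES: configure_nodes,
        State.SERIAL_PUT: serial_put,
        State.PARALLEL_PUT: parallel_put,
        State.INCREMENT_CLOCK: increment_clock,
    }
    state = State.CONFIGURE_NODES
    while state is not State.DONE:
        state = handlers[state]()
    return ctx["acks"]
```

Because each state is an isolated handler, a step can be swapped or reordered without touching the others, which is the main appeal of modeling routing this way.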

The other important feature that we use at LinkedIn is the read-only stores pipeline. Our largest read-only stores cluster powers most of the recommendation features and fetches around 3 TB of data every day from Hadoop. Some of the initial work we did in this area was to make the fetch + swap pipeline more efficient by migrating off the old servlet-based tool to a new tool built on the administrative service. This gave us better visibility into the progress of data transfer (the fetch phase) along with more control over swaps. Besides optimizing the data-transfer pipeline, we also spent some time iterating on the underlying storage format and data layout. The final storage format that we have come up with has a smaller memory footprint, supports iterators, and has been tweaked to make rebalancing of read-only stores as simple as possible.
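For readers unfamiliar with the fetch + swap pattern, here is a rough sketch of the general idea in Python: fetch the new data set into its own version directory, then atomically repoint a link at it. The directory names (`version-N`, `latest`), the function names, and the use of a local copy in place of a Hadoop fetch are assumptions for illustration, not a description of how the Voldemort server does it.

```python
import os
import shutil
import tempfile


def fetch(source_dir, store_dir, version):
    """Copy a freshly built data set into a new version directory."""
    target = os.path.join(store_dir, f"version-{version}")
    shutil.copytree(source_dir, target)
    return target


def swap(store_dir, version_dir):
    """Atomically repoint the 'latest' symlink at the fetched version."""
    latest = os.path.join(store_dir, "latest")
    tmp_link = latest + ".tmp"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    os.symlink(version_dir, tmp_link)
    os.replace(tmp_link, latest)  # renaming over the old link is atomic on POSIX
    return os.path.realpath(latest)
```

Separating the two phases means the slow part (the transfer) can be monitored and retried while readers keep using the old data, and the switch itself is a single rename. Keeping older version directories around would also make it cheap to point the link back if a new data set turns out to be bad.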

Besides working a lot on our Java client, we also updated some of the clients that rely on Voldemort's server-side routing. In particular, the Python and Ruby clients have gone through some iterations. Many of our internal customers use these for quick prototyping of their ideas during our monthly hackdays. In fact, one by-product of a recent hackday is that Voldemort now has a nice GUI, more about which you can read here.

What's next?

With some of the core pieces of the system now in place, our roadmap is to place more emphasis on the operations and management aspects. For example, we want to provide better tools to make 'migration' or 'rebalancing' of clusters as easy as pressing a button. Doing so would allow us to run larger clusters, thereby decreasing the operational overhead of maintaining many small clusters. We're also slowly moving away from our old philosophy of adding a new cluster for every new application, and instead plan to add better support for multi-tenancy.

Fork / Download Voldemort, read all about updating to the new version, find an interesting new sub-project, submit a patch and come join the Death Eaters.