Continuous Integration for Mobile

October 26, 2011

A couple of months ago, LinkedIn launched a brand-new mobile experience on a wide variety of platforms, including native apps and HTML5 web apps. Under the hood, we made heavy use of JavaScript and HTML5 on the client side and Node.js on the server side. To develop, test, and release rapidly, we built an automated continuous integration pipeline. In this post, I'll describe how LinkedIn's mobile CI works.

Overview

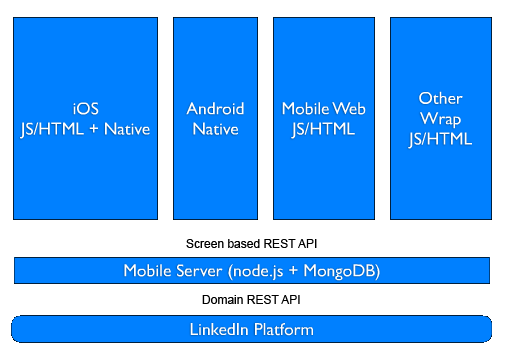

Here's a high level overview of the architecture for LinkedIn Mobile:

The various mobile platforms we support (iPhone, Android, mobile web) mostly consist of JavaScript and HTML apps making RESTful requests to our Node.js mobile server. This server, in turn, fetches data via RESTful calls to the LinkedIn Platform. You can read more about our mobile architecture here and here.

The continuous integration pipeline

Our mobile CI consists of a five-stage pipeline:

- Unit tests: run in less than 10 seconds to test individual modules/units

- Fixtures tests: test solely the client apps by having them use static/mock data

- Layout tests: test the appearance of the client apps by taking screenshots and comparing them against baseline images

- Deployment: automatically deploy to a staging environment

- End-to-end tests: run end-to-end tests against the staging environment

The earlier stages are generally faster than the later ones, and each stage must pass 100% before the next one runs.
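The gating rule is simple enough to sketch. The following is a minimal Python illustration, not LinkedIn's actual Hudson configuration: the `Stage` shape and the `run_pipeline` name are hypothetical, and each stage is modeled as a callable that reports how many of its tests passed.

```python
from typing import Callable, List, Tuple

# Hypothetical stage shape: (name, run), where run() returns (tests_passed, tests_total).
Stage = Tuple[str, Callable[[], Tuple[int, int]]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, bool]]:
    """Run stages in order, stopping at the first stage that is not 100% green."""
    results: List[Tuple[str, bool]] = []
    for name, run in stages:
        passed, total = run()
        ok = passed == total  # 99% passing still counts as a failure
        results.append((name, ok))
        if not ok:
            break  # the later, slower stages never run
    return results
```

Ordering the cheap stages first means most broken commits fail within seconds, and nothing reaches staging unless every earlier test is green.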

Unit tests and code coverage

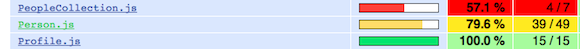

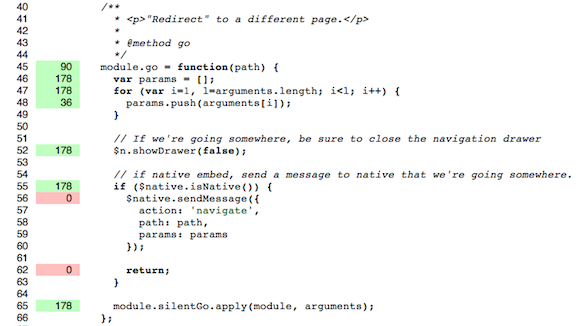

We use Hudson as our continuous integration server and have it execute unit tests after every commit. We picked JsTestDriver as it's one of the few JavaScript unit testing frameworks available that supports continuous integration. As a bonus, it also calculates code coverage:
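To make the coverage number concrete (or to gate a build on it), here is a minimal sketch that computes line coverage from an LCOV-style report, made of `SF:`, `DA:<line>,<count>` and `end_of_record` records. Treating JsTestDriver's coverage output as LCOV is an assumption here; check what your coverage plugin actually emits. The function names are hypothetical.

```python
from typing import Dict, Optional, Tuple


def lcov_line_coverage(lcov_text: str) -> Dict[str, Tuple[int, int]]:
    """Return {source_file: (lines_hit, lines_instrumented)} from LCOV-style text."""
    per_file: Dict[str, Tuple[int, int]] = {}
    current: Optional[str] = None
    for raw in lcov_text.splitlines():
        line = raw.strip()
        if line.startswith("SF:"):
            current = line[3:]
            per_file.setdefault(current, (0, 0))
        elif line.startswith("DA:") and current is not None:
            # DA:<line number>,<execution count>[,<checksum>]
            count = int(line[3:].split(",")[1])
            hit, total = per_file[current]
            per_file[current] = (hit + (1 if count > 0 else 0), total + 1)
        elif line == "end_of_record":
            current = None
    return per_file


def percent(hit: int, total: int) -> float:
    """Line coverage as a percentage; 0.0 when nothing was instrumented."""
    return 100.0 * hit / total if total else 0.0
```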

Fixtures tests

The clients can be configured to make requests to an nginx server that returns test fixtures as static JSON files. Because these tests deal only with mock data, any failure can be attributed to a client-side bug. As an added bonus, using mock data lets these tests run faster, since no requests reach the real mobile server or the LinkedIn Platform.
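nginx is just serving files here. To show the idea without an nginx install, here is a minimal stand-in built on Python's standard `http.server`. The path-to-file convention (`/v1/people/me` maps to `<fixtures_dir>/v1/people/me.json`) and the helper names are hypothetical, not LinkedIn's actual fixture layout.

```python
import os
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit


class FixtureHandler(BaseHTTPRequestHandler):
    """Answer GET requests with a canned JSON file chosen by the request path."""

    def do_GET(self):
        root = self.server.fixtures_dir
        # Query strings are ignored; only the path selects the fixture.
        rel = urlsplit(self.path).path.strip("/") or "index"
        candidate = os.path.abspath(os.path.join(root, rel + ".json"))
        # Refuse paths that escape the fixtures directory, and missing files.
        if os.path.commonpath([root, candidate]) != root or not os.path.isfile(candidate):
            self.send_error(404, "no fixture for /" + rel)
            return
        with open(candidate, "rb") as f:
            body = f.read()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging during test runs


def start_fixture_server(fixtures_dir: str, port: int = 0) -> ThreadingHTTPServer:
    """Serve fixtures_dir on 127.0.0.1 in a background thread (port 0 = any free port)."""
    server = ThreadingHTTPServer(("127.0.0.1", port), FixtureHandler)
    server.fixtures_dir = os.path.abspath(fixtures_dir)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Pointing the client's API base URL at this server instead of the real mobile server is what isolates the client in these tests.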

We can also configure nginx during testing to return a variety of HTTP status codes and see how the client handles them. You can check out some of the example nginx rules we use to make sure our client code gracefully handles 500 errors, 204 responses, and so on.
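As a hypothetical illustration of what such rules exercise, here is the kind of branching a client needs, sketched in Python for consistency with the other examples even though the real clients are JavaScript. The state names and messages are made up.

```python
import json
from typing import Any, Optional, Tuple


def handle_response(status: int, body: bytes) -> Tuple[str, Optional[Any]]:
    """Map an HTTP response to a UI state instead of assuming 200 + JSON."""
    if status == 204:
        # 204 No Content has an empty body; parsing it as JSON would raise.
        return ("empty", None)
    if 200 <= status < 300:
        if not body:
            return ("empty", None)
        try:
            return ("ok", json.loads(body))
        except ValueError:  # json.JSONDecodeError subclasses ValueError
            return ("error", "malformed response")
    if 500 <= status < 600:
        return ("error", "server unavailable, please retry")
    return ("error", "unexpected status %d" % status)
```

The 204 branch is the classic trap: a client that blindly parses every body as JSON works against a happy-path backend and breaks the first time the server legitimately returns no content.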

Layout tests

The fixtures environment also lets us run layout tests, which automatically verify that the UI renders correctly without requiring a human to look at it. We use WebKit Layout and Rendering to take screenshots with a headless browser. When UI development is complete, the screenshots are labeled as baselines. From then on, we simply diff new PNG files against the baselines to catch regressions.
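A minimal sketch of the baseline bookkeeping, assuming screenshots arrive as PNG bytes and baselines live in one directory as `<name>.png`. Both that layout and the `check_screenshot` helper are hypothetical; byte-for-byte equality is the strictest possible diff.

```python
import os


def check_screenshot(name: str, png: bytes, baseline_dir: str, approve: bool = False) -> str:
    """Compare a screenshot with its stored baseline.

    Returns "baseline-saved", "no-baseline", "match", or "mismatch".
    """
    path = os.path.join(baseline_dir, name + ".png")
    if approve:
        # UI work is done: label this screenshot as the baseline.
        os.makedirs(baseline_dir, exist_ok=True)
        with open(path, "wb") as f:
            f.write(png)
        return "baseline-saved"
    if not os.path.exists(path):
        return "no-baseline"
    with open(path, "rb") as f:
        return "match" if f.read() == png else "mismatch"
```

Usage: run once with `approve=True` after a screen is signed off, then call without it on every build; any `"mismatch"` fails the layout stage.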

The fixtures minimize random data that could cause the UI to change between test runs. In the few cases where the rendering is inherently dynamic, such as timestamps, we execute custom JavaScript to replace the dynamic data with static data just before the screenshots are taken. We have also added a tolerance to the PNG comparison, since WebKit sometimes produces slightly different PNG files.
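The post doesn't say how the tolerance is defined, so here is one plausible approach, sketched under stated assumptions: screenshots have already been decoded into rows of `(r, g, b)` tuples (decoding is left out to keep this stdlib-only), and two hypothetical knobs decide a match, a per-channel tolerance and a maximum fraction of differing pixels. The default values are illustrative, not LinkedIn's.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def diff_ratio(baseline: Image, candidate: Image, channel_tolerance: int = 0) -> float:
    """Fraction of pixels where any channel differs by more than channel_tolerance."""
    same_shape = len(baseline) == len(candidate) and all(
        len(a) == len(b) for a, b in zip(baseline, candidate)
    )
    if not same_shape:
        return 1.0  # a size change counts as a full mismatch
    total = sum(len(row) for row in baseline)
    if total == 0:
        return 0.0
    differing = 0
    for row_a, row_b in zip(baseline, candidate):
        for pa, pb in zip(row_a, row_b):
            if max(abs(x - y) for x, y in zip(pa, pb)) > channel_tolerance:
                differing += 1
    return differing / total


def matches_baseline(baseline: Image, candidate: Image,
                     channel_tolerance: int = 8, max_diff_ratio: float = 0.001) -> bool:
    """Illustrative defaults: ignore small colour shifts and up to 0.1% of pixels."""
    return diff_ratio(baseline, candidate, channel_tolerance) <= max_diff_ratio
```

Separating the two knobs lets anti-aliasing noise (small shifts everywhere) pass while a genuinely moved or recoloured element (large shifts in a region) still fails.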

Deployment and end-to-end tests

After the layout tests pass 100%, a production build is automatically deployed to a staging environment, where we run end-to-end tests. These tests execute the full code path across our entire architecture and try to catch any integration issues missed in earlier rounds of testing. We also run the end-to-end tests continually against production, which lets us quickly identify live site issues such as a server going down or a load balancer problem.
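The post doesn't describe how production failures are reported. As a hypothetical policy, here is a minimal sketch of a monitor that reruns the suite on an interval and alerts only when the result flips, so on-call gets one message per outage rather than one per run. `run_suite` and `alert` are placeholder hooks, and the bounded `iterations` parameter exists only to make the loop testable.

```python
import time
from typing import Callable, List


def monitor(run_suite: Callable[[], bool], alert: Callable[[str], None],
            interval_s: float, iterations: int) -> List[bool]:
    """Rerun the end-to-end suite and alert on pass/fail transitions only."""
    history: List[bool] = []
    last_ok = True  # assume production starts healthy
    for i in range(iterations):
        ok = run_suite()  # True when every end-to-end test passed
        history.append(ok)
        if ok != last_ok:
            alert("production e2e tests " + ("recovered" if ok else "FAILING"))
        last_ok = ok
        if i < iterations - 1:
            time.sleep(interval_s)
    return history
```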

We created an end-to-end test framework using Selenium WebDriver and TestNG. We've had success using iPhoneDriver to run these tests against an iPhone simulator. We'd like to use AndroidDriver as well, but until recently we were blocked by an issue with CSS selectors, which the WebDriver team has just fixed. While Selenium WebDriver tests can be written in a variety of languages, we went with Java as it's the most widely supported. Check out this gist to see a few example Selenium WebDriver tests.

We use JSCoverage to analyze the code coverage of our end-to-end tests.
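JSCoverage-instrumented scripts record line hit counts in a global `_$jscoverage` object keyed by file name. This sketch assumes that object has been pulled out of the page after a test run (for example through WebDriver's `executeScript`) and serialized with `JSON.stringify`, which turns non-instrumented lines into `null`. It then merges several runs, such as hypothetical iPhone-simulator and mobile-web runs, so that a line counts as covered if any run executed it. The function names are illustrative.

```python
import json
from typing import Dict, Set, Tuple

FileCoverage = Dict[str, Tuple[Set[int], Set[int]]]  # file -> (executable, executed)


def executed_lines(jscoverage_json: str) -> FileCoverage:
    """Split each file's hit array into executable and executed line numbers."""
    result: FileCoverage = {}
    for filename, hits in json.loads(jscoverage_json).items():
        executable = {i for i, h in enumerate(hits) if h is not None}
        executed = {i for i, h in enumerate(hits) if h}  # None and 0 are both "not hit"
        result[filename] = (executable, executed)
    return result


def combined_coverage(*runs: FileCoverage) -> float:
    """Percentage of executable lines hit by at least one run."""
    executable: Set[Tuple[str, int]] = set()
    executed: Set[Tuple[str, int]] = set()
    for run in runs:
        for filename, (exe, hit) in run.items():
            executable |= {(filename, n) for n in exe}
            executed |= {(filename, n) for n in hit}
    return 100.0 * len(executed) / len(executable) if executable else 0.0
```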

Wrapping up

Across all the stages of the mobile CI, we've achieved an overall code coverage of 75%, which has allowed us to catch a large number of bugs, especially early in the development cycle through unit tests and fixtures tests. The turnaround for these tests is fast: bugs are usually caught less than 5 minutes after new code is committed. The end-to-end tests have also been instrumental in detecting the few issues that slip into later stages of development, catching bugs in both staging and production.

In the future, as the team moves closer to test-driven development (TDD), we should be able to add a final stage to the pipeline: deploying to production. That would close the loop and achieve continuous deployment.