Optimizing Linux Memory Management for Low-latency / High-throughput Databases

October 7, 2013

Co-author: Cuong Tran

Table of Contents

- Introduction

- Setting up the context

- Reproducing and understanding Linux's zone reclaim behavior

- NUMA memory rebalancing also triggers direct page scans

- Lessons learned

Introduction

GraphDB is the storage layer of LinkedIn's real-time distributed social graph service. Our service handles simple queries like the first and second degree networks of LinkedIn members, as well as more sophisticated queries like the graph distances between members, paths between members, etc. We support multiple node and edge types and process all queries on the fly. You can get a feel for how some of LinkedIn's applications use our social graph in this blog post.

Every page view on LinkedIn generates multiple queries to GraphDB. This means GraphDB handles hundreds of thousands of queries per second, with 99% of our responses having microsecond latency (typically tens of microseconds). Consequently, even 5-millisecond response latencies from GraphDB can slow down LinkedIn.com dramatically.

For most of 2013, we have seen intermittent and occasionally severe spikes in GraphDB's response latencies. We dug in to investigate these spikes, and our efforts led us deep into the details of how the Linux kernel manages virtual memory on NUMA (Non Uniform Memory Access) systems. In a nutshell, certain Linux optimizations for NUMA had severe side effects which directly impacted our latencies. We think that any online, low-latency database system running on Linux can benefit from the findings we share in this document: after rolling out our optimizations, we saw our error rates (i.e. the proportion of slow or timed-out queries) drop to 1/4th the original.

The first part of the document provides the relevant background information: an outline of how GraphDB manages its data, the symptoms of our problem, and how the Linux Virtual Memory Management (VMM) subsystem works. In the second part of the document, we will detail the methodology, observations and conclusions from our experiments in getting to the root cause of the problem. We end with a summary of the lessons we have learned.

This document should be self-contained for people with knowledge of how operating systems work.

Setting up the context

1) How GraphDB manages its data

GraphDB is essentially an in-memory database. On the read side, we mmap all our data files and keep the active set in memory at all times. Our reads are spread fairly randomly through the whole data set, and we have microsecond latency for 99% of requests. A typical GraphDB host has 48GB of physical memory and consumes about 20GB of it: 15GB for the mmapped data which is off-heap, 5 GB for the JVM heap.

On the write side, we have a log-structured storage system. We divide all our data into 10 MB append-only segments. At present, each GraphDB host has around 1500 active segments, out of which only 25 are writable at any given moment. The remaining 1475 segments are read only.

Being a log structured store, we perform periodic compactions of our data. Further, since our compaction scheme is quite aggressive, we churn through about 900 data segments per day per host. That is about 9GB worth of data files per day per host even though our data footprint grows by a small fraction of that number. As a result, on a 48GB host, we can fill up the page cache with garbage in about 5 days.
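The figures above can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch: it assumes decimal gigabytes (1 GB = 1000 MB) to match the round numbers quoted here, and the constant names are made up for illustration.

```python
# Back-of-the-envelope check of the segment and page cache numbers.
# Assumption: decimal units (1 GB = 1000 MB), matching the round figures in the text.
SEGMENT_MB = 10
ACTIVE_SEGMENTS = 1500
CHURNED_SEGMENTS_PER_DAY = 900
HOST_MEMORY_GB = 48


def gb(megabytes):
    """Convert megabytes to decimal gigabytes."""
    return megabytes / 1000


active_set_gb = gb(ACTIVE_SEGMENTS * SEGMENT_MB)
churn_gb_per_day = gb(CHURNED_SEGMENTS_PER_DAY * SEGMENT_MB)
days_to_fill = HOST_MEMORY_GB / churn_gb_per_day

print(f"active mmapped data: {active_set_gb:.0f} GB")
print(f"segment churn: {churn_gb_per_day:.0f} GB/day")
print(f"days to churn through {HOST_MEMORY_GB} GB: {days_to_fill:.1f}")
```

The estimate ignores the memory already held by the active set and the JVM heap, so the page cache would in practice fill with stale segments somewhat sooner.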

2) Symptoms of the problem

The primary symptom of our performance problems was spikes in GraphDB's response latency. These spikes were accompanied by a very high number of direct page scans and low memory efficiency, as shown by sar. In particular, the 'pgscand/s' column of the sar -B output would show 1 to 5 million page scans per second for several hours, with 0% virtual memory efficiency.
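The same two numbers can be approximated from two snapshots of /proc/vmstat. The sketch below is not the tooling used in this investigation. It assumes the counters follow the pgscan_direct*, pgscan_kswapd*, pgsteal_direct* and pgsteal_kswapd* naming, which varies between kernel versions, and it computes efficiency as pages stolen divided by pages scanned, which is how the sar man page defines %vmeff.

```python
def parse_vmstat(text):
    """Parse /proc/vmstat-style 'name value' lines into a dict of ints."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def sum_prefix(counters, prefix, exclude=()):
    """Sum every counter whose name starts with prefix, minus excluded names."""
    return sum(v for k, v in counters.items()
               if k.startswith(prefix) and k not in exclude)


def scan_rates(before, after, interval_s):
    """Return (direct page scans per second, efficiency %) between snapshots."""
    def delta(prefix, exclude=()):
        return sum_prefix(after, prefix, exclude) - sum_prefix(before, prefix, exclude)

    # pgscan_direct_throttle counts throttling events, not scanned pages.
    direct = delta("pgscan_direct", exclude=("pgscan_direct_throttle",))
    scanned = direct + delta("pgscan_kswapd")
    stolen = delta("pgsteal_direct") + delta("pgsteal_kswapd")
    efficiency = 100.0 * stolen / scanned if scanned else 0.0
    return direct / interval_s, efficiency
```

Feeding it two snapshots taken a known number of seconds apart gives a rate comparable to pgscand/s. A high rate with an efficiency near zero means the kernel is scanning many pages and reclaiming almost none of them.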

During the times of performance degradation, the system would be under no apparent memory pressure: we would have plenty of inactive cached pages as reported by /proc/meminfo. Also, not every spike in the pgscand/s would induce a corresponding latency spike from GraphDB.

We were puzzled by two questions:

- If there is no obvious memory pressure on the system, why is the kernel scanning for pages?

- Even if the kernel is scanning for pages, why do our response latencies suffer? Only writer threads need to allocate new memory and the reader and writer thread pools are decoupled. So why would one block the other?

The answers to these questions led us to Linux's optimizations for NUMA systems. In particular, it led us to the 'zone reclaim' feature of Linux. If you don't know much about NUMA, Linux and zone reclaim, don't worry, the next section will fill you in. Armed with that information, you should be able to make sense of the rest of this document.

3) A little information on Linux, NUMA, and zone reclaim

Understanding how Linux handles NUMA architectures is crucial to understanding the root cause of our problem. Here are some excellent resources on Linux and NUMA:

- Jeff Frost: PostgreSQL, NUMA and zone reclaim mode on Linux. If you are pressed for time, this is the one you should read.

- Christoph Lameter: Non Uniform Memory Access, an overview. In particular read about how Linux reclaims memory from NUMA zones.

- Jeremy Cole: The MySQL "swap insanity" problem and the effects of NUMA architecture.

It is also important to understand the mechanism Linux uses to reclaim pages from the page cache.

In a nutshell, Linux maintains a set of 3 lists of pages per NUMA zone: the active list, the inactive list, and the free list. New page allocations move pages from the free list onto the active list. An LRU algorithm moves pages from the active list to the inactive list, and then from the inactive list to the free list. The following is the best place to learn about Linux's management of the page cache:

- Mel Gorman: Understanding the Linux Virtual Memory Manager, chapter 13: Page Frame Reclamation.
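To make the three-list description concrete, here is a deliberately simplified toy model. It is not the kernel's algorithm: the class name, the one-page-at-a-time reclaim, and the strict LRU ordering are all assumptions made for illustration. It only mirrors the flow described above: free to active on allocation, active to inactive by age, inactive back to free on reclaim.

```python
from collections import OrderedDict


class ToyZone:
    """Toy model of one zone's free, active and inactive page lists."""

    def __init__(self, total_pages):
        if total_pages < 1:
            raise ValueError("a zone needs at least one page")
        self.free = total_pages        # count of free page frames
        self.active = OrderedDict()    # oldest entry first
        self.inactive = OrderedDict()  # oldest entry first

    def touch(self, page):
        """Access a page, allocating it from the free list if it is not resident."""
        if page in self.active:
            self.active.move_to_end(page)  # refresh its LRU position
        elif page in self.inactive:
            del self.inactive[page]        # promote back to the active list
            self.active[page] = True
        else:
            if self.free == 0:
                self.reclaim(1)
            self.free -= 1
            self.active[page] = True

    def reclaim(self, count):
        """Free up to count pages, demoting the oldest active pages if needed."""
        for _ in range(count):
            if not self.inactive and self.active:
                oldest, _flag = self.active.popitem(last=False)
                self.inactive[oldest] = True
            if self.inactive:
                self.inactive.popitem(last=False)
                self.free += 1
```

Even in this toy, a page that is still in use can be demoted and freed if reclaim runs before the page is touched again. That is the kind of behavior the rest of this post investigates.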

We tried turning off zone reclaim mode on our production hosts after studying these documents. Our performance improved immediately upon doing so. That's why we focused on understanding what zone reclaim does and how it affects our performance.

The rest of this post digs deep into Linux's zone reclaim behavior. If you are unfamiliar with what a zone reclaim is, then please read Jeff Frost's article linked to above at the very least.

Reproducing and understanding Linux's zone reclaim behavior

1) Setting up the experiment

To understand what triggers a zone reclaim and how zone reclamation affects our performance, we wrote a program to simulate GraphDB's read and write behavior.

We ran this program for a 24 hour period. For the first 17 hours, we ran it with zone reclaim enabled. For the last 7 hours we ran it with zone reclaim disabled. The program ran uninterrupted through the full 24 hour period. The only change to the environment was disabling zone reclaim by writing '0' to /proc/sys/vm/zone_reclaim_mode at the 17 hour mark.

Here is what the program did:

- It mmapped 2500x10MB of data files, read through them, and then unmapped them so as to fill the Linux page cache with garbage. This would get the system into a state similar to the one a GraphDB host would be in after a few days of uptime.

- A set of reader threads mmapped another set of 2500x10MB files and read portions of them at random. These 2500 files comprised the active set. This simulated the GraphDB read behavior.

- A set of writer threads continuously created 10MB files. Once a writer filled a file, it would pick a random file from the active set, unmap it, and then replace it with the newly created file. This simulated the GraphDB write behavior.

- Finally, if a reader thread took more than 100ms to complete a read, it would print out the usr, sys, and elapsed time for that particular access. This enabled us to keep track of the degradation in read performance.
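The last step, timing each access and reporting the slow ones, could look roughly like the sketch below. It is not the original simulator: the function names are hypothetical, it touches a single byte per read, and it uses os.times(), which reports CPU time for the whole process rather than for one thread.

```python
import mmap
import os
import random
import tempfile
import time

SLOW_READ_SECONDS = 0.100  # the 100ms threshold from the description above
PAGE_SIZE = mmap.PAGESIZE


def timed_page_read(mapping, rng):
    """Touch one random page of the mapping; return (elapsed, usr, sys) seconds."""
    page_count = max(1, len(mapping) // PAGE_SIZE)
    offset = rng.randrange(page_count) * PAGE_SIZE
    cpu_before = os.times()
    start = time.monotonic()
    _ = mapping[offset]  # reading one byte faults the page in if it is not resident
    elapsed = time.monotonic() - start
    cpu_after = os.times()
    return (elapsed,
            cpu_after.user - cpu_before.user,
            cpu_after.system - cpu_before.system)


def report_if_slow(elapsed, usr, sys_time, threshold=SLOW_READ_SECONDS):
    """Return a log line for a slow access, or None if it was fast enough."""
    if elapsed > threshold:
        return f"slow read: usr={usr:.3f}s sys={sys_time:.3f}s elapsed={elapsed:.3f}s"
    return None


def demo():
    """Map a small temporary file and time one random page access."""
    with tempfile.NamedTemporaryFile() as f:
        f.write(b"\0" * (4 * PAGE_SIZE))
        f.flush()
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mapping:
            return timed_page_read(mapping, random.Random(0))
```

When elapsed time is large but usr and sys are both small, the thread spent its time waiting, typically on a major fault served from disk. A large sys value points at time spent inside the kernel instead.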

We ran this program on a host with 48GB of physical memory. Our working set was about 25GB, and there was nothing else running on the system. So we did not put the host under any real memory pressure.

2) Understanding what triggers zone reclaim

When a process requests a page, the kernel checks whether its preferred NUMA zone has enough free memory and whether more than 1% of its pages are reclaimable. The percentage is tunable and is determined by the vm.min_unmapped_ratio sysctl. Reclaimable pages are file-backed pages (i.e. pages which are allocated through mmapped files) which are not currently mapped to any process. In particular, from /proc/meminfo, the 'reclaimable pages' are 'Active(file)+Inactive(file)-Mapped' (source).
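The 'Active(file)+Inactive(file)-Mapped' figure is easy to compute from the text of /proc/meminfo. This is a minimal sketch with hypothetical function names. Note that /proc/meminfo reports host-wide totals, whereas the kernel's check is made per zone, so the result is only a rough indicator.

```python
def parse_meminfo_kb(text):
    """Parse /proc/meminfo-style 'Name:  value kB' lines into a dict of ints."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields and fields[0].isdigit():
            values[name.strip()] = int(fields[0])
    return values


def reclaimable_kb(meminfo):
    """Unmapped file-backed page cache: Active(file) + Inactive(file) - Mapped."""
    return meminfo["Active(file)"] + meminfo["Inactive(file)"] - meminfo["Mapped"]


def read_reclaimable_kb(path="/proc/meminfo"):
    """Compute the figure for the running host."""
    with open(path) as f:
        return reclaimable_kb(parse_meminfo_kb(f.read()))
```

Comparing the result with MemTotal gives a host-wide approximation of the percentage that vm.min_unmapped_ratio is tested against.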

How does the kernel determine how much free memory is enough? The kernel uses zone 'watermarks' (source), which are derived from the value in /proc/sys/vm/min_free_kbytes (source). They are also influenced by the /proc/sys/vm/lowmem_reserve_ratio value (source). The computed values on a given host can be found in /proc/zoneinfo under the 'min', 'low', and 'high' labels, as seen below:

Node 1, zone Normal
  pages free     17353
        min      11284
        low      14105
        high     16926
        scanned  0
        spanned  6291456
        present  6205440

The kernel reclaims pages when the number of free pages in a zone falls below the 'low' watermark. The page reclaim stops when the number of free pages rises above the 'low' watermark. Further, these computations are per-zone: a zone reclaim can be triggered on a particular zone even if other zones on the host have plenty of free memory.
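A per-zone check of free pages against the 'low' watermark can be sketched as follows. The parser is an assumption-laden illustration, not a complete /proc/zoneinfo reader: it picks up only the 'pages free', 'min', 'low' and 'high' fields, and the function names are hypothetical.

```python
import re


def parse_zone_watermarks(text):
    """Map (node, zone name) to its free page count and min/low/high watermarks."""
    zones = {}
    current = None
    for line in text.splitlines():
        header = re.match(r"Node\s+(\d+),\s+zone\s+(\S+)", line.strip())
        if header:
            key = (int(header.group(1)), header.group(2))
            current = zones.setdefault(key, {})
            continue
        if current is None:
            continue
        fields = line.split()
        if len(fields) == 3 and fields[:2] == ["pages", "free"]:
            current["free"] = int(fields[2])
        elif (len(fields) == 2 and fields[0] in ("min", "low", "high")
              and fields[0] not in current):
            # Per-CPU pageset lines are written as 'high:' and are skipped here.
            current[fields[0]] = int(fields[1])
    return zones


def below_low_watermark(zone):
    """True when the zone's free pages have dropped under its 'low' watermark."""
    return zone["free"] < zone["low"]
```

For the Node 1 Normal zone shown above, free (17353) is above low (14105), so the check returns False. Running it against every zone once per second shows which zone, if any, is the one triggering reclaim.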

Here is a graph that demonstrates this behavior from our experiment. Some points worth noting:

- the black line denotes page scans on the zone and is plotted against the y-axis on the right.

- the red line denotes the number of free pages in the zone.

- the 'low' watermark for the zone is in green.

We observed similar patterns on our production hosts. In all cases, we see that the page scan plot is virtually a mirror image of the free pages plot. In other words, Linux predictably triggers zone reclaims when the free pages fall below the 'low' watermark of the zone.

3) Characteristics of systems experiencing zone reclaims

Having understood what triggers a zone reclaim, we shifted focus to understanding how zone reclaims would affect performance. To do so, we collected the information from the following sources every second for the duration of the experiment.

- /proc/zoneinfo

- /proc/vmstat

- /proc/meminfo

- numactl -H
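A once-per-second collector for the three /proc files can be very small. The sketch below is not the script used for the experiment. The function names are hypothetical, it keeps raw text in memory rather than writing to disk, and it leaves out the numactl -H output, which would need a subprocess call.

```python
import time

DEFAULT_SOURCES = ("/proc/zoneinfo", "/proc/vmstat", "/proc/meminfo")


def take_snapshot(paths=DEFAULT_SOURCES, clock=time.time):
    """Read each file once and return its raw text keyed by path."""
    snapshot = {"timestamp": clock()}
    for path in paths:
        try:
            with open(path) as f:
                snapshot[path] = f.read()
        except OSError as exc:
            # Keep sampling even if one source is missing on this host.
            snapshot[path] = f"<unreadable: {exc}>"
    return snapshot


def collect(samples, interval_s=1.0, paths=DEFAULT_SOURCES, sleep=time.sleep):
    """Take the requested number of snapshots, pausing between them."""
    results = []
    for index in range(samples):
        results.append(take_snapshot(paths))
        if index + 1 < samples:
            sleep(interval_s)
    return results
```

Storing the raw text and parsing it later keeps the sampling loop cheap, which matters when the host being measured is already struggling.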

After plotting all the data in all these files and studying the patterns, we found the following characteristics most interesting for explaining how zone reclaim would impact read performance negatively.

Our first observation was that, with zone reclaim enabled, Linux performed mostly direct reclaims (ie. reclaims performed in the context of application threads and counted as direct page scans). Once zone reclaim was disabled, the direct reclaims stopped, but the number of reclaims performed by kswapd increased. This would explain the high pgscand/s we observed from sar:

Secondly, even though our read and write behavior did not change, the number of pages in Linux's active and inactive list showed a significant change once zone reclaim was disabled. In particular, with zone reclaim enabled, Linux retained about 20GB worth of pages in the active list. With zone reclaim disabled, it retained about 25GB of pages in the active list, which is the size of our working set:

Thirdly, we observed that there was a significant difference in the page-in behavior when zone reclaim was disabled. In particular, while the rate of page-outs to disk stayed the same as expected, the rate of page-ins from disk reduced significantly with zone reclaim disabled. The rate of major faults changed in an identical manner as the page-in rate. The graph below illustrates this observation:

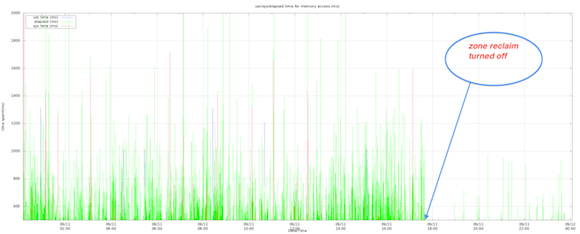

Finally, we observed that the number of expensive memory accesses in the program dropped significantly once we disabled zone reclaim mode. The graph below shows the memory access latency in milliseconds, along with the amount of time spent in system and user CPU. We see that the program spent most of its time waiting for I/O while occasionally being blocked in system CPU.

4) How zone reclaim impacts read performance

Based on the evidence above, it seems that the direct reclaim path triggered by zone reclaim is too aggressive in removing pages from the active list and adding them to the inactive list. In particular, with zone reclaim enabled, active pages seem to wind up on the inactive list, and then are subsequently paged out. Consequently, reads suffer a higher rate of major faults, and hence are more expensive.

To exacerbate the problem, the shrink_inactive_list function which is part of the direct reclaim path seems to acquire a global spinlock on the zone (source), preventing all other threads from modifying the zone for the duration of the reclaim. Hence we see occasional spikes in system CPU in the reader threads, presumably when multiple threads are contending for this lock.

NUMA memory rebalancing also triggers direct page scans

We have just seen that zone reclaims trigger direct page scans, and that these scans page out active data, causing a degradation of read performance. Apart from zone reclaim, we found that a RedHat feature called Transparent HugePages (THP) can also trigger direct page scans while trying to 'rebalance' memory in NUMA zones.

With the THP feature, the system transparently allocates 2MB 'huge pages' for anonymous (non file-backed) memory. This increases the hit-rate on the TLB and reduces the size of the page tables in the system. RedHat says THP can result in a performance boost of up to 10% for certain work loads.

Additionally, since this feature is meant to work transparently, it also comes with code to split huge pages into regular pages (counted as thp_split in /proc/vmstat) and compact regular pages into huge pages (counted as thp_collapse).

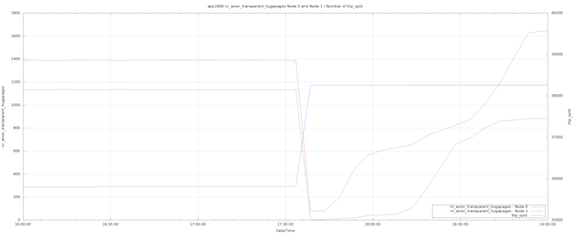

We have seen thp_split activity correlate with a high rate of direct page scans even when there is no memory pressure on a zone. In particular, we have seen Linux split the huge pages of our 5GB Java heap in order to move them from one NUMA zone to another. This can be seen in the graph below: approximately 5GB worth of huge pages from both NUMA zones are split, and some of them are moved from Node 1 to Node 0. This activity correlated with a very high rate of direct page scans.

We could not reproduce this behavior in our experiments, and it does not seem to be a common cause of direct page scans. However, we have enough data to suggest that Transparent HugePages does not play well with NUMA systems, and hence we have disabled it on our RHEL machines by running the following command:

echo never > /sys/kernel/mm/transparent_hugepage/enabled
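To confirm which THP mode is in effect, the same file can be read back: the kernel lists all available modes and marks the active one with square brackets, for example 'always madvise [never]'. A minimal sketch with hypothetical function names:

```python
def active_thp_setting(text):
    """Return the bracketed (active) mode from the THP 'enabled' file contents."""
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    return None


def read_thp_setting(path="/sys/kernel/mm/transparent_hugepage/enabled"):
    """Read the active THP mode on the running host."""
    with open(path) as f:
        return active_thp_setting(f.read())
```

Note that the echo command changes the setting only until the next reboot, so a check like this is worth running after a host restarts.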

Lessons learned

1) Linux's NUMA optimizations don't make sense for typical database workloads

Databases whose main performance gains come from caching a large amount of data in memory don't really benefit from NUMA optimizations. In particular, the performance gain that comes from saving a trip to the disk far outweighs the performance gains from keeping memory local to a particular socket on a multi-socket system.

Linux's NUMA optimizations can be effectively disabled by doing the following:

- Turn zone reclaim mode off: add vm.zone_reclaim_mode = 0 to /etc/sysctl.conf and run sysctl -p to load the new settings.

- Enable NUMA interleaving for your application: run it with numactl --interleave=all COMMAND

These are now the defaults on all our production systems.
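The zone reclaim setting can be read back from /proc/sys/vm/zone_reclaim_mode to verify that a host picked it up. The value is a bitmask: according to the kernel's sysctl documentation, 1 turns zone reclaim on, 2 lets it write out dirty pages, and 4 lets it swap pages. The sketch below, with hypothetical function names, decodes it.

```python
ZONE_RECLAIM_BITS = {
    1: "zone reclaim on",
    2: "zone reclaim writes dirty pages out",
    4: "zone reclaim swaps pages",
}


def describe_zone_reclaim_mode(value):
    """List the behaviors enabled by a zone_reclaim_mode bitmask."""
    flags = [name for bit, name in ZONE_RECLAIM_BITS.items() if value & bit]
    return flags or ["zone reclaim off"]


def read_zone_reclaim_mode(path="/proc/sys/vm/zone_reclaim_mode"):
    """Read the current bitmask on the running host."""
    with open(path) as f:
        return int(f.read().strip())
```

A host configured as described above should report 0, which decodes to 'zone reclaim off'.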

2) Don't take Linux for granted: manage the garbage in your page cache yourself

Since GraphDB's log-structured storage system did not reuse its data segments, we created a lot of garbage in Linux's page cache over time. It turns out that Linux is quite bad at cleaning up this garbage properly: it often throws the baby out with the bath water, i.e. it pages out active data. This causes our read performance to suffer due to a higher number of major faults. Both the direct reclaim path and the kswapd path are guilty of this, but the problem is much more pronounced with direct reclaims.

We have added a segment pool to our storage system so that we reuse segments. This way we create fewer files and put less pressure on the Linux page cache. Our initial testing with this segment pooling has shown very encouraging results.
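The idea of a segment pool can be illustrated with a short sketch. This is not GraphDB's implementation: the class, its method names, and the file naming scheme are assumptions, and the sketch is not thread-safe. It shows only the core idea of handing back a previously released segment file instead of creating a new one.

```python
import os

SEGMENT_BYTES = 10 * 1024 * 1024  # 10 MB segments, as described earlier


class SegmentPool:
    """Hands out fixed-size segment files, reusing released ones when possible."""

    def __init__(self, directory, segment_bytes=SEGMENT_BYTES):
        self.directory = directory
        self.segment_bytes = segment_bytes
        self._free = []    # paths of released segments that are ready for reuse
        self._created = 0  # number of files ever created by this pool

    def acquire(self):
        """Return a segment path, creating a new file only if the pool is empty."""
        if self._free:
            return self._free.pop()
        path = os.path.join(self.directory, f"segment-{self._created:06d}.dat")
        self._created += 1
        with open(path, "wb") as f:
            f.truncate(self.segment_bytes)  # preallocate the full segment size
        return path

    def release(self, path):
        """Mark a segment as reusable once compaction no longer needs its data."""
        self._free.append(path)

    @property
    def files_created(self):
        return self._created
```

Because a reused segment overwrites the same file, its old page cache entries are replaced in place rather than left behind as garbage that the kernel must later scan and reclaim.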

Parting thoughts

As soon as we suspected that zone reclaim was a problem, we turned the setting off in production. The results have been dramatic. Have a look at our median response latencies from production hosts for the past 4 months. No prizes for guessing when we turned off zone reclaim. One small setting for Linux, one dramatic performance improvement for LinkedIn!

Acknowledgements

Davi Arnaut provided some of the insights and knowledge presented here. Josh Walker also helped guide the investigations and was a great support.

Update: the original version of this post said our error rates dropped by 400% after we rolled out our optimizations. We meant that our error rates dropped to 1/4th the original. The post has been updated to reflect this.